The following is excerpted from Chapter 10 of my book.

Environmentalism, with its attendant army of politicos all armed to the teeth with environmental laws, is, let us make no mistake, the highroad to hell.

Before going all the way green, I urge you to take a longer look into exactly what horse you’re backing here: it may well turn out to be a horse of an entirely different color than you think.

Environmentalism is a philosophy that upholds a profound hatred of humankind:

“Human beings, as a species, have no more value than slugs” (John Davis, editor of Earth First! Journal).

“Mankind is a cancer; we’re the biggest blight on the face of the earth” (president of PETA and environmental activist Ingrid Newkirk).

“If you haven’t given voluntary human extinction much thought before, the idea of a world with no people in it may seem strange. But, if you give it a chance, I think you might agree that the extinction of Homo Sapiens would mean survival for millions, if not billions, of Earth-dwelling species…. Phasing out the human race will solve every problem on earth, social and environmental” (Ibid).

Quoting Richard Conniff, in the pages of Audubon magazine (September, 1990): “Among environmentalists sharing two or three beers, the notion is quite common that if only some calamity could wipe out the entire human race, other species might once again have a chance.”

Environmental theorist Christopher Manes (writing under the nom-de-guerre Miss Ann Thropy): “If radical environmentalists were to invent a disease to bring human population back to ecological sanity, it would probably be something like AIDS.”

Environmental guru “Reverend” Thomas Berry proclaims that “humans are an affliction of the world, its demonic presence. We are the violators of Earth’s most sacred aspects.”

A speaker at one of Earth First!’s little cult gatherings: “Optimal human population: zero.”

“Ours is an ecological perspective that views Earth as a community and recognizes such apparent enemies as ‘disease’ (e.g., malaria) and ‘pests’ (e.g., mosquitoes) not as manifestations of evil to be overcome but rather as vital and necessary components of a complex and vibrant biosphere … [We have] an antipathy to ‘progress’ and ‘technology.’ We can accept the pejoratives of ‘Luddite’ and ‘Neanderthal’ with pride…. There is no hope for reform of industrial empire…. We humans have become a disease: the Humanpox” (Dave Foreman, past head of Earth First!).

“Human happiness [is] not as important as a wild and healthy planet. I know social scientists who remind me that people are part of nature, but it isn’t true. Somewhere along the line we … became a cancer. We have become a plague upon ourselves and upon the Earth…. Until such time as Homo Sapiens should decide to rejoin nature, some of us can only hope for the right virus to come along” (biologist David Graber, “Mother Nature as a Hothouse Flower,” Los Angeles Times Book Review).

“The ending of the human epoch on Earth would most likely be greeted with a hearty ‘Good riddance!’” (Paul Taylor, “Respect for Nature: A Theory of Environmental Ethics”).

“If we don’t overthrow capitalism, we don’t have a chance of saving the world ecologically. I think it is possible to have an ecologically sound society under socialism. I don’t think it is possible under capitalism” (Judi Bari, of Earth First!).

“Isn’t the only hope for the planet that the industrialized civilizations collapse? Isn’t it our responsibility to bring that about?” (Maurice Strong, Earth Summit 91).

David Brower, former head of the Sierra Club and founder of Friends of the Earth, calls for developers to be “shot with tranquilizer guns.”

Why?

“Human suffering is much less important than the suffering of the planet,” he explains.

Also from the socialist Sierra Club: “The goal now is a socialist, redistributionist society, which is nature’s proper steward and society’s only hope.”

Quoting the Citizens Party’s 1980 presidential candidate Barry Commoner:

“Nothing less than a change in the political and social system, including revision of the Constitution, is necessary to save the country from destroying the natural environment…. Capitalism is the earth’s number one enemy.”

From Barry Commoner again:

“Environmental pollution is a sign of major incompatibility between our system of production and the environmental system that supports it. [The socialist way is better because] the theory of socialist economics does not appear to require that growth should continue indefinitely.”

So much for your unalienable right to life, liberty, and the pursuit of happiness. Indeed:

“Individual rights will have to take a back seat to the collective” (Harvey Ruvin, International Council for Local Environmental Initiatives, Dade County, Florida).

Former Sierra Club head David Brower, pushing for his own brand of eugenics:

“Childbearing [should be] a punishable crime against society, unless the parents hold a government license. All potential parents [should be] required to use contraceptive chemicals, the government issuing antidotes to citizens chosen for childbearing.”

That, in case you didn’t know, is limited government, environmentalist-style.

“There’s nothing wrong with being a terrorist, as long as you win. Then you write history” (Sierra Club board member Paul Watson).

Again from Paul Watson, writing in that propaganda rag Earth First! Journal: “Right now we’re in the early stages of World War III…. It’s the war to save the planet. The environmental movement doesn’t have many deserters and has a high level of recruitment. Eventually there will be open war.”

And:

“By every means necessary we will bring this and every other empire down! Mutiny and sabotage in defense of Mother Earth!”

Lisa Force, another Sierra Club board member and quondam coordinator of the Center for Biological Diversity, advocates “prying ranchers and their livestock from federal lands. In 2000 and 2003, [Sierra] sued the U.S. Department of the Interior to force ranching families out of the Mojave National Preserve. These ranchers actually owned grazing rights to the preserve; some families had been raising cattle there for over a century. No matter. Using the Endangered Species Act and citing the supposed loss of ‘endangered tortoise habitat,’ the Club was able to force the ranchers out” (quoted from Navigator magazine).

It is a sad fact for environmentalists that in free societies, humans are allowed to trade freely.

Among other things, the right to private property means: that which you produce is yours by right.

Private property is the crux of freedom: you cannot, in any meaningful sense, be said to be free if you are not allowed to use the things that you own, including those things necessary to sustain your life. Everything you need to know about a political ideology is contained in its attitude toward property.

It comes as no surprise, therefore, to learn that “private property,” in the words of one environmental group, “is just a sacred cow” (Greater Yellowstone Report, Greater Yellowstone Coalition).

That is also known as socialism.

In 1990, a man named Benjamin Cone Jr. inherited 7,200 acres of land in Pender County, North Carolina. He proceeded to plant chufa and rye for wild turkeys; he conducted controlled burns on his property to improve the habitat for deer and quail. And he succeeded: in no time, that habitat flourished. Inadvertently, however, he attracted a number of red-cockaded woodpeckers, a species listed as endangered. He was warned by a certain governmental agency that, on threat of imprisonment or stiff fines, he was not allowed to disturb any of the trees the woodpeckers inhabited, all of which were on his property. This put 1,560 acres of his own land off-limits to him, the owner. In response, Benjamin Cone Jr. began clear-cutting the rest of his land, saying: “I cannot afford to let those woodpeckers take over the rest of my property. I’m going to start massive clear-cutting” (Richard L. Stroup, Eco-nomics, pp. 56-57).

Socialist Eric Schlosser, author of the embarrassing Fast Food Nation, makes no secret of his statist agenda. As Doctor Thomas DiLorenzo points out, Schlosser lauds the “scientific socialists” (a generic term coined by comrade V.I. Lenin) and everything they stand for: government intervention and bureaucracy, public works, job-destroying minimum wage laws, OSHA regulations, food regulations, regulatory agencies to control ranching, farming, and supermarkets, bans on advertising and much more. Only then, he says, will that great day come when restaurants exclusively sell “free-range, organic, grass-fed hamburgers” (Fast Food Nation: The Dark Side of the All-American Meal).

All of which is simply by way of saying that we, as individual consumers, should not be allowed to choose what we want to eat, and that the supply of free-range hamburgers should not be determined by demand. Rather, by law, government bureaucrats must do this for us, regardless of whether we personally want to eat organic, grass-fed beef.

Colorado congressman Scott McInnis confessed that four firefighters burned to death in Washington state because bureaucrats took 10 hours to approve a water drop. The reason: using local river water is prohibited by the Endangered Species Act, on the grounds that it may threaten a certain kind of trout.

Further proof of the Sierra Club’s hatred of humanity can be found in its 1995 attempt to block an Animas River water diversion project, which was designed to bring water to Durango and the nearby Ute Indian Reservation.

Dams and irrigation are often life-and-death matters in the arid West, a fact of which the Sierra Club is well aware. Thus, after successfully getting the project slashed by more than 70 percent, thereby depriving the Ute Reservation of much-needed water, the Sierra Club lawyers went for the jugular: they demanded the project be cut still more.

Fortunately for the rest of us, they overplayed their hand.

Their shady methods and motives prompted the following quote from Senator Ben Nighthorse Campbell:

“The enviros have never been interested in a compromise. They just simply want to stop development and growth. And the way you do that in the West is to stop water.”

From a chairwoman of the Ute Indian tribe: “The environmentalists don’t seem to care how we live.”

Greenpeace is the largest and wealthiest environmental group in the world. Of their co-founder David McTaggart, fellow co-founder Paul Watson said this:

“The secret to David McTaggart’s success is the secret to Greenpeace’s success: It doesn’t matter what is true, it only matters what people believe is true. You are what the media define you to be. Greenpeace became a myth, and a myth-generating machine.”

And since environmentalists, rather than addressing the actual data, believe that citing the source of funding is the only argument one ever needs to refute a counterargument, they should be extraordinarily persuaded by this very partial list of Greenpeace’s funding.

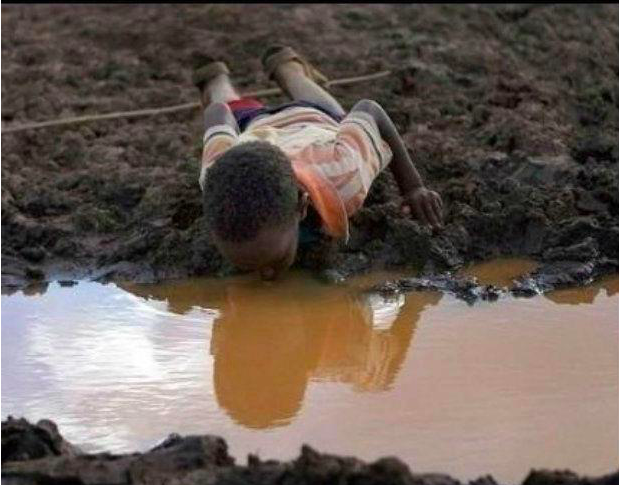

Most people have no inkling that throughout Greenpeace’s tireless campaign against “Frankenfood” (i.e., biotech food; “Frankenfood” is a word coined by Greenpeace campaign director Charles Margulis, who hates technology), the Third World has steadily perished from malnutrition and famine as a direct result of that campaign.

Quoting Tanzania’s Doctor Michael Mbwille (of the non-profit Food Security Network):

“Greenpeace prints and circulates lies faster than the Code Red virus infected the world’s computers. If we were to apply Greenpeace’s scientifically illiterate standards [for soybeans] universally, there would be nothing left on our tables.”

(For an example of how to successfully expose Greenpeace’s lies, please read this relevant article.)

Candidly, I haven’t even begun.

And yet from this small sampling, you can probably get an idea of what an exceptionally gracious and non-politically motivated folk these environmentalists and environmental leaders are. Indeed, environmentalism is a benevolent and life-affirming philosophy, and the people who populate it are a kind, non-violent people, whose reasoning is sound and scrupulous, and who believe unreservedly in the individual’s inalienable right to life and property.

There is of course only one real problem with all that: these people are hypocrites, and environmentalism worships at the shrine of death.

The entire movement, replete, as it is, with its politicos and environmental politics, is not simply “wrong.” That would be too easy.

The environmental movement is criminal.

Reader, if you have even a vestigial love of freedom within you, you must denounce environmentalism with all your heart. You must see it for what it actually is: a statist philosophy of human-hatred and enslavement.

Environmentalism is neo-Marxism at its blackest.
